I’ve been pitched by numerous flow battery companies in my days as a deeptech/climatetech investor. The promise of the technology has always been:

- Long cycle life (the number of charge-discharge cycles you can do before the performance degrades)

- Easy to scale: you want 2x the storage? Just get 2x the electrolyte!

- Low fire risk: most flow batteries use water-based electrolytes which won’t ignite in the air (the way the lithium in lithium-ion batteries does)

Despite compelling benefits, this category never achieved the level of success or scale that lithium-ion did. This was due in part to a variety of technological limitations (poor energy density, lower cycle efficiency, concerns around the amount of vanadium-containing electrolyte “lying around” in a system, etc.). But the main cause was the breathtaking progress lithium-ion batteries have made in cost, energy density, and safety, driven first by consumer electronics demand and then by electric vehicle demand.

This C&EN article covers the renewed optimism the flow battery world is experiencing as market interest in the technology revives.

My hot-take🔥: while technological improvements play a part, once again, what is driving the flow battery market is what’s happening in the lithium-ion world. There simply is too much demand for energy storage and growing uncertainty about the ability of lithium-ion to handle it in the face of the conflict between the West and China (the leading supplier of lithium-ion batteries) and supply chain concerns about critical minerals for lithium-ion batteries (like nickel and cobalt). Grid storage players have to look elsewhere. (Electric vehicle companies would probably like to but do not have the option!)

Considering the importance of grid energy storage in electrifying our world and onboarding new renewable generation, I think having and seeing more options is a good thing. So I, too, am optimistic here 👍🏻

Note: this is the first in a (hopefully ongoing) series of posts called “What I’m Reading” where I’ll share & comment on an interesting article I’ve come across!

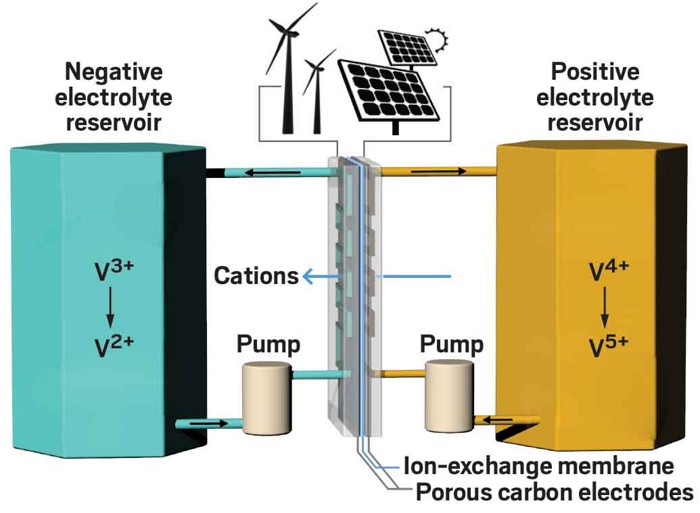

Redox flow batteries have a reputation of being second best. Less energy intensive and slower to charge and discharge than their lithium-ion cousins, they fail to meet the performance requirements of snazzy, mainstream applications, such as cars and cell phones. There’s no such thing as a flow-battery Tesla.

But the companies at the International Flow Battery Forum in Prague in late June were adamant that flow batteries are now cheaper, more reliable, and safer than lithium ion in a growing number of real-world stationary energy applications. Flow-battery makers say their technology—and not lithium ion—should be the first choice for capturing excess renewable energy and returning it when the sun is not out and the wind is not blowing.

Flow batteries, the forgotten energy storage device

Alex Scott | C&EN