Historically, Intel has both (1) designed and (2) manufactured the chips it sells (primarily into computer and server systems). It prided itself on having the most advanced (1) designs and (2) manufacturing technology, keeping both close to its chest.

In the late 1990s and 2000s, semiconductor companies increasingly embraced the “fabless model”, whereby they would only do the (1) design while outsourcing the manufacturing to foundries like TSMC. This made it much easier and less expensive to build a new chip business and is the secret to the success of semiconductor giants like NVIDIA and Qualcomm.

Companies like Intel scoffed at this, arguing that the combination of (1) design and (2) manufacturing gave their products an advantage, one that they used to achieve a dominant position in the computing chip segment. That argument also underpins why they have never made a significant effort to become a contract manufacturer. After all, if part of your technological magic is the (2) manufacturing, why give it to anyone else?

The success of TSMC has raised a lot of questions about Intel’s advantage in manufacturing and, given recent announcements by Intel and the US’s CHIPS Act, has brought a renewed focus on Intel actually becoming a contract manufacturer to the world’s leading chip designers.

While much of the attention has gone to the rivalry over manufacturing prowess and the geopolitical reasons behind it, I think the real reason Intel has to make the foundry business work is simple: their biggest customers are all becoming chip designers.

While a lot of laptops, desktops, and servers are still sold in the traditional fashion, the reality is that more and more of the server market is dominated by a handful of hyperscale data center operators like Amazon, Google, Meta/Facebook, and Microsoft, companies that have historically been able to obtain the best prices from Intel because of their volume. But in recent years, in the chase for better performance, cost, and power consumption, they have begun designing their own chips adapted to their own systems (as this latest Google announcement for Google’s own ARM-based server chips shows).

Are these chips as good as Intel’s across every dimension? Almost certainly not. It’s hard to overtake Intel’s decades of design prowess and market insight. But they don’t have to be. They only have to be better at the specific use cases Google, Microsoft, Amazon, and others need them for.

That leaves Intel with really only one option: it has to make the foundry business work, or it risks losing not just the revenue from (1) designing a data center chip, but the revenue from (2) manufacturing it as well.

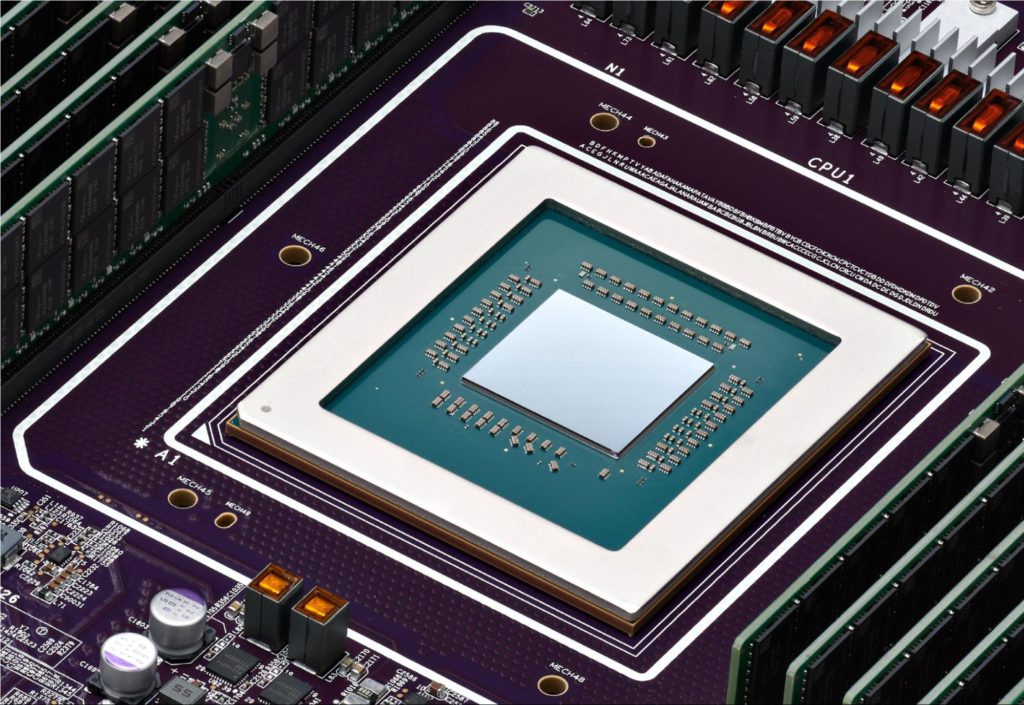

Axion processors combine Google’s silicon expertise with Arm’s highest performing CPU cores to deliver instances with up to 30% better performance than the fastest general-purpose Arm-based instances available in the cloud today, up to 50% better performance and up to 60% better energy-efficiency than comparable current-generation x86-based instances. That’s why we’ve already started deploying Google services like BigTable, Spanner, BigQuery, Blobstore, Pub/Sub, Google Earth Engine, and the YouTube Ads platform on current generation Arm-based servers and plan to deploy and scale these services and more on Axion soon.

Introducing Google Axion Processors, our new Arm-based CPUs

Amin Vahdat | Google Blog