The Dunning-Kruger effect captures something many of us find familiar: the least skilled often assume they know more than they actually do. Wrap that sentiment in an academic paper by two researchers at an Ivy League institution, throw in some charts and statistics, and you’ve got an easily citable piece of trivia that makes you feel smarter than the person you just caught commenting on something they know nothing about.

Well, according to this fascinating blog post (HT: Eric), we have it all wrong. The way Dunning and Kruger constructed their statistical test all but guarantees a relationship between skill and the gap between perceived ability and skill, whether or not a real effect exists.

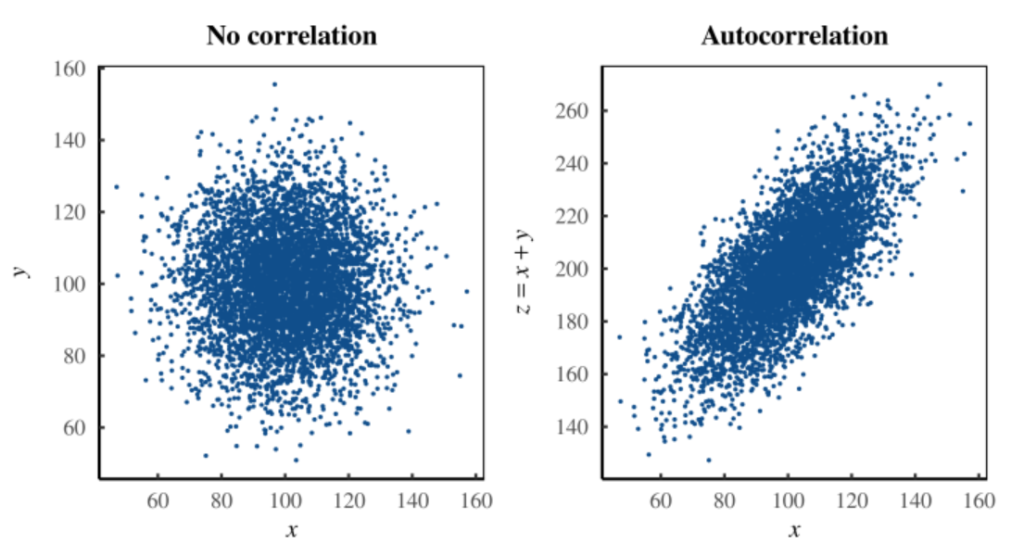

The whole thing is worth a read, but the gist is this: using completely random numbers (where there is no relationship between perceived ability and skill), you will always find a relationship between the “skill gap” (perceived ability – skill) and skill. Put more plainly, the test amounts to comparing

$$(y - x) \sim x$$

with y being perceived ability and x being actual measured ability.
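Why does pure noise produce this pattern? A short derivation makes it explicit. This is my own sketch using standard covariance identities, not something reproduced from Fix’s post, and it assumes only that x and y have finite variances $\sigma_x^2$ and $\sigma_y^2$:

$$
\begin{aligned}
\operatorname{Cov}(y - x,\, x) &= \operatorname{Cov}(y, x) - \sigma_x^2 \\
\operatorname{Var}(y - x) &= \sigma_y^2 + \sigma_x^2 - 2\operatorname{Cov}(y, x) \\
\operatorname{Corr}(y - x,\, x) &= \frac{\operatorname{Cov}(y, x) - \sigma_x^2}{\sigma_x \sqrt{\sigma_x^2 + \sigma_y^2 - 2\operatorname{Cov}(y, x)}}
\end{aligned}
$$

If x and y are independent, $\operatorname{Cov}(y, x) = 0$ and this collapses to

$$
\operatorname{Corr}(y - x,\, x) = -\frac{\sigma_x}{\sqrt{\sigma_x^2 + \sigma_y^2}} = -\frac{1}{\sqrt{2}} \approx -0.71 \qquad (\sigma_x = \sigma_y).
$$

So unless perceived ability tracks measured ability very closely ($\operatorname{Cov}(y, x) \ge \sigma_x^2$), the skill gap is negatively correlated with skill by construction.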

What you should be looking for is a direct relationship between perceived ability and measured ability (between y and x). When you test that with real data, you find that the evidence for the claim generally isn’t there!

In other words:

> The Dunning-Kruger effect also emerges from data in which it shouldn’t. For instance, if you carefully craft random data so that it does not contain a Dunning-Kruger effect, you will still find the effect. The reason turns out to be embarrassingly simple: the Dunning-Kruger effect has nothing to do with human psychology. It is a statistical artifact — a stunning example of autocorrelation.

Source: *The Dunning-Kruger Effect is Autocorrelation*, Blair Fix, Economics from the Top Down
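You can reproduce the shape of the original quartile chart from noise alone. This sketch sorts random pairs by measured ability, splits them into quartiles, and averages each group. It needs Python 3.8+ for `statistics.fmean`. `dk_quartile_table` is a hypothetical helper, and uniform 0–100 scores are my stand-in for the percentile ranks used in the study:

```python
import random
import statistics


def dk_quartile_table(n: int = 10_000, seed: int = 7):
    """Mimic the Dunning-Kruger quartile chart using independent random data.

    Returns (quartile, mean measured ability, mean perceived ability) rows.
    Assumption: x and y are independent uniform scores on [0, 100).
    """
    rng = random.Random(seed)
    pairs = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(n)]
    pairs.sort(key=lambda p: p[0])  # order people by measured ability x
    size = n // 4
    table = []
    for q in range(4):
        group = pairs[q * size:(q + 1) * size]  # the q-th quartile of x
        mean_x = statistics.fmean(p[0] for p in group)
        mean_y = statistics.fmean(p[1] for p in group)
        table.append((q + 1, mean_x, mean_y))
    return table


table = dk_quartile_table()
for q, mx, my in table:
    print(f"Q{q}: measured {mx:5.1f}  perceived {my:5.1f}  gap {my - mx:+5.1f}")
```

Mean perceived ability stays flat around 50 in every quartile while measured ability climbs. The bottom quartile therefore appears to overestimate itself by roughly 37 points and the top quartile to underestimate itself by a similar amount, even though perceived ability was drawn independently of skill.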
