Regardless of how you feel about Microsoft’s products, you have to appreciate the brilliance of their strategic “playbook”:

- Use the fact that Microsoft’s operating system/productivity software is used by almost everyone to identify key customer/partner needs

- Build a product which is usually only a second/third-best follower product but make sure it’s tied back to Microsoft’s products

- Take advantage of the time and market share that Microsoft’s channel influence, developer community, and product integration buys to invest in the new product with Microsoft’s massive budget until it achieves leadership

- If steps 1-3 fail to give Microsoft a dominant position, either exit (because the market is no longer important) or buy out a competitor

- Repeat

While the quality of Microsoft’s execution of each step can be called into question, I’d be hard pressed to find a better approach than this one, and I’m sure much of their success can be attributed to finding good ways to repeatedly follow this formula.

It’s for that reason that I’m completely bewildered by Microsoft’s consumer electronics business strategy. Instead of finding good ways to integrate the Zune, XBox, and Windows Mobile franchises together or with the Microsoft operating system “mothership” the way Microsoft did by integrating its enterprise software with Office or Internet Explorer with Windows, these three businesses largely stand apart from Microsoft’s home field (PC software) and even from each other.

This is problematic for two big reasons. First, because non-PC devices are outside of Microsoft’s usual playground, it’s not a surprise that Microsoft finds it difficult to expand into new territory. For Microsoft to succeed here, it needs to pull out all the stops and it’s shocking to me that a company with a stake in the ground in four key device areas (PCs, mobile phones, game consoles, and portable media players) would choose not to use one of the few advantages it has over its competitors.

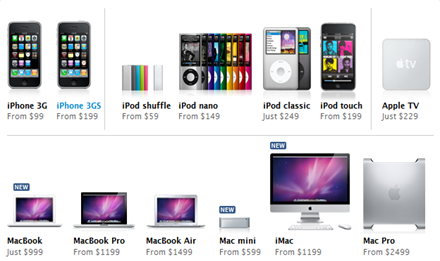

The second and most obvious reason (to consumers at least) is that Apple has not made this mistake. Apple’s iPhone and iPod Touch product lines are clear evolutions of their popular iPod MP3 players, which integrate well with Apple’s iTunes computer software and iTunes online store. The entire Apple line-up, although each product is a unique entity, has a similar look and feel. The Safari browser that powers the Apple computer internet experience is basically the same one that powers the iPhone and iPod Touch. Similarly, the same online store and software (iTunes) which lets iPods load themselves with music lets iPod Touches/iPhones load themselves with applications.

That neat little integrated package not only makes it easier for Apple consumers to use a product, but the coherent experience across the different devices gives customers even more of a reason to use and/or buy other Apple products.

Contrast that approach with Microsoft’s. Not only are the user interfaces and product designs for the Zune, XBox, and Windows Mobile completely different from one another, they don’t play well together at all. Applications that run on one device (be it the Zune HD, a Windows PC, an XBox, or a Windows Mobile phone) are unlikely to run on any other. One might forgive this if it were just PC applications that had trouble being “ported” to Microsoft’s other devices (after all, apps that run on an Apple computer don’t work on the iPhone and vice versa). But even the pairings where one would expect portability (i.e. the Zune HD and the XBox because they’re both billed as gaming platforms, or the Zune HD and Windows Mobile because they’re both portable products) don’t share applications. Their application development processes don’t line up well. And, as far as I’m aware, the devices have completely separate application and content stores!

While recreating the Windows PC experience on three other devices is definitely overkill, were I in Ballmer’s shoes, I would make a few simple recommendations which I think would dramatically benefit all of Microsoft’s product lines (and I promise they aren’t the standard Apple/Linux fanboy’s “build something prettier” or “go open source”):

- Centralize all application/content “marketplaces” – Apple is no internet genius. Yet, they figured out how to do this. I fail to see why Microsoft can’t do the same.

- Invest in building a common application runtime across all the devices – Nobody’s expecting a low-end Windows Mobile phone or a Zune HD to run Microsoft Excel, but expecting little widgets or games to work across all of Microsoft’s devices is not unreasonable. It would go a long way towards encouraging developers to develop for Microsoft’s new device platforms (if a program can run on just the Zune HD, there’s only so much revenue that a developer can take in, but if it can also run on the XBox and all Windows Mobile phones, then the revenue potential becomes much greater) and towards encouraging consumers to buy more Microsoft gear.

- Find better ways to link Windows to each device – This can be as simple as building something like iTunes to simplify device management and content streaming. I have yet to meet anyone with a Microsoft device who hasn’t complained about how poorly the devices work with PCs.
