Randomized controlled trials (RCTs) are the “gold standard” in healthcare for proving a treatment works. And for good reason. A well-designed and well-powered (i.e., large enough) clinical trial establishes what is really due to a treatment as opposed to another factor (e.g., luck, reversion to the mean, patient selection, etc.), and it’s a good thing that drug regulation is tied to successful trial results.

But, there’s one wrinkle. Randomized controlled trials are not reality.

RCTs are tightly controlled: only patients who meet specific “inclusion criteria” are allowed to participate. Follow-up is organized, and adherence to protocol is closely tracked. Typically, related medical care is also provided free of charge.

This is exactly what you want from a scientific and patient volunteer safety perspective, but, as we all know, the real world is messier. In the real world:

- Physicians prescribe treatments to patients who do not necessarily fit the exact inclusion criteria of the clinical trial. After all, many clinical trials exclude people who are extremely sick, who are children, or who are pregnant.

- Patients may not take their designated treatment on time or in the right dose … and nobody finds out.

- Follow-up on side effects and progress is often haphazard.

- Cost and free time considerations may change how and when a patient comes in.

- Physicians also have greater choice in the real world. They only prescribe treatments they think will work, whereas in an RCT, patients get the treatment they’ve been randomly assigned to.

These differences raise the question: just how different is the real world from a randomized controlled trial?

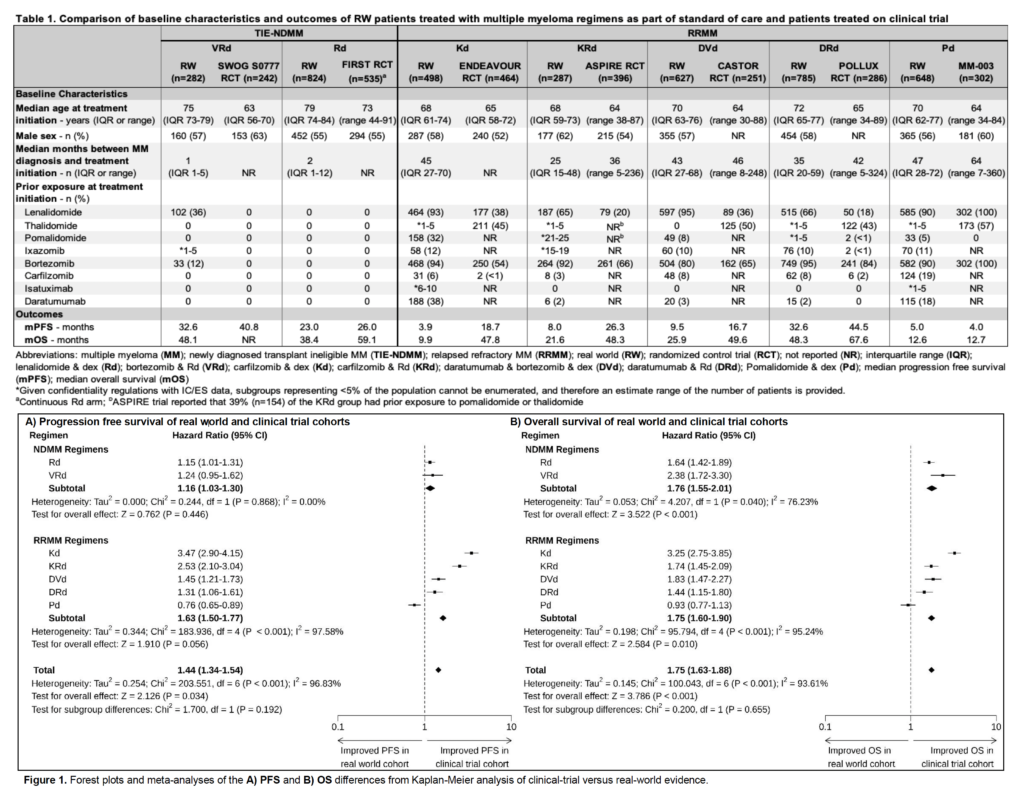

A group in Canada studied this question and presented their findings at the recent ASH (American Society of Hematology) meeting. The researchers looked at ~4,000 patients in Canada with multiple myeloma, a cancer for which multiple treatment regimens have been developed and approved. Using Canada’s national administrative database, they tracked how these patients fared on 7 different treatment regimens and compared the outcomes to the published RCT results for each treatment.

The findings are eye-opening. While results varied considerably from treatment to treatment, real world effectiveness was, in general, worse by a wide margin than the efficacy reported in the randomized controlled trials (see table below).

While the safety profiles (as measured by the rate of “adverse events”) seemed similar between the real world and the RCTs, real world patients did, in aggregate, 44% worse on progression-free survival and 75% worse on overall survival than their RCT counterparts!

The only treatment where the real world did better than the RCT was one where the trial volunteers were likely much sicker than average. (Note: the fact that one of the seven treatment regimens went the other way, yet the aggregate was still 40%+ worse, shows that some of the individual comparisons were vastly worse.)

The lesson here is not that we should stop doing or listening to randomized controlled trials. After all, this study shows that they were reasonably good at predicting safety, not to mention that they continue to be our only real tool for establishing whether a treatment has real clinical value prior to giving it to the general public.

But this study imparts two key lessons for healthcare:

- Do not assume that the results you see in a clinical trial are what you will see in the real world. Different patient populations, resources, treatment adherence, and many other factors will impact what you see.

- Especially for treatments we expect to use with many people, real world monitoring studies are valuable in helping to calibrate expectations and, potentially, identify patient populations where a treatment is better or worse suited.
