Republicans have declared a “war on Harvard” in recent months, and one front of that campaign is a request to the SEC to examine how Harvard’s massive endowment values illiquid assets like venture capital and private equity.

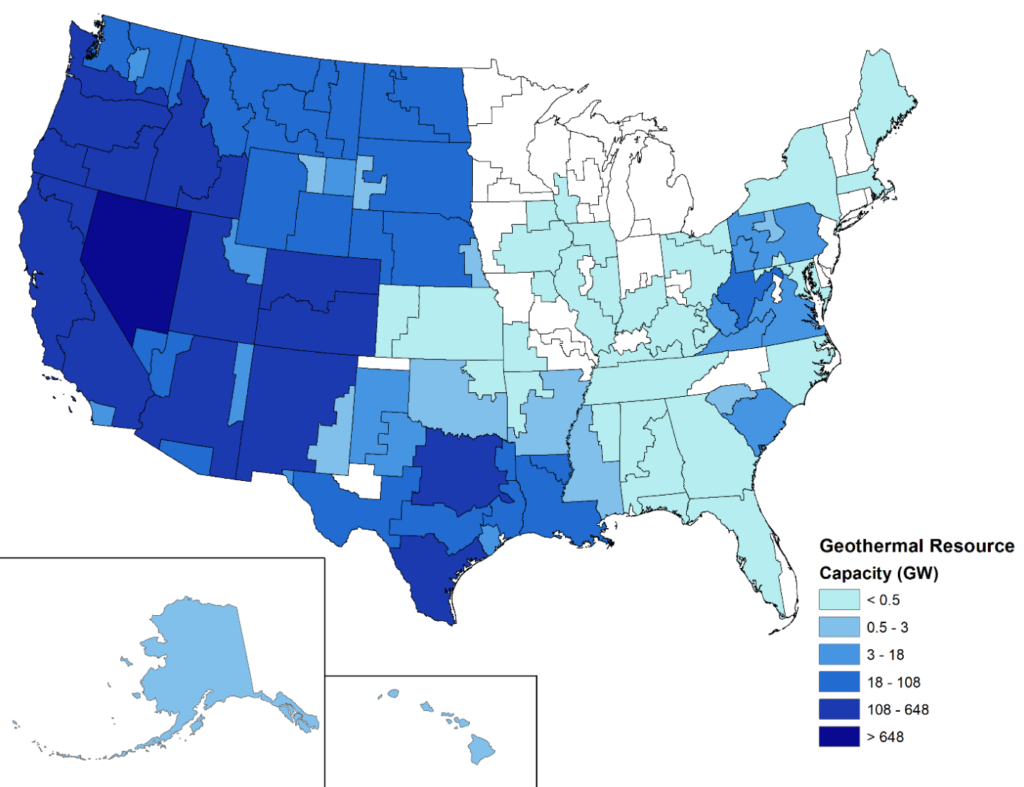

What’s fascinating is that, in targeting Harvard this way, Republicans may have declared war on private equity and venture capital in general. Because these funds’ holdings (stakes in privately held companies) are highly illiquid, it is considered “standard practice” in accounting to simply ask the investment funds to provide “fair market” valuations of those assets.

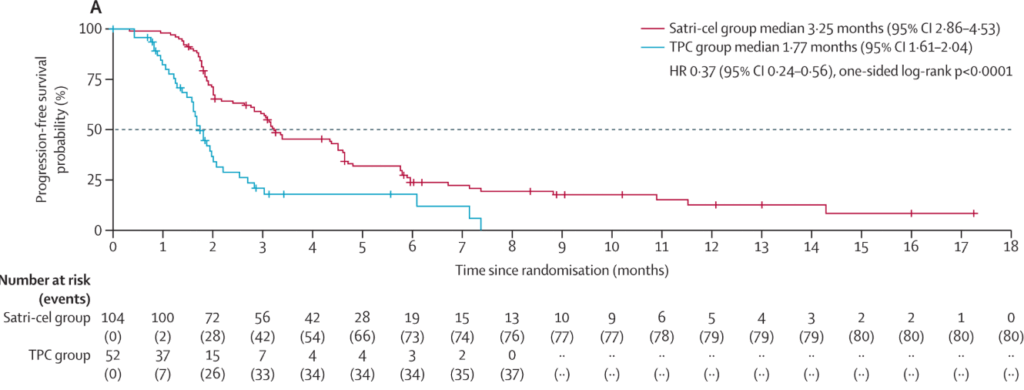

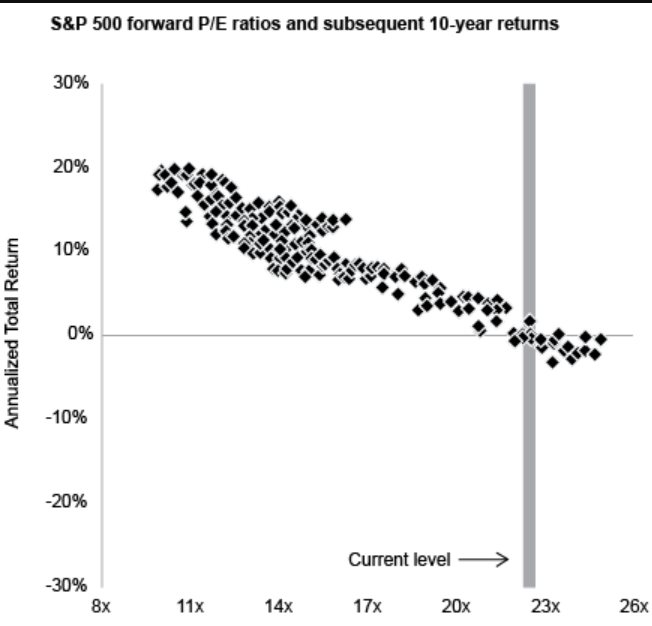

This is a practical necessity: these companies are very difficult to value (their shares rarely trade, and even highly paid professionals miss the mark). But it also means investment firms are allowed to “grade their own homework”, marking some companies at valuations much higher than they have a right to be, which has produced quite a bit of “grade inflation” across the entire sector.

If Harvard is forced to revalue these assets against a more objective standard, such as a 409A valuation or the price from a secondary transaction (where shares change hands without the company being involved), both of which artificially deflate prices, it wouldn’t be a surprise to see significant “grade deflation”. That could have major consequences for private capital:

- Less capital for private equity / venture capital: Many institutional investors (limited partners, or LPs) like private equity and venture capital in part because the “grade inflation” smooths over the price turbulence that more liquid assets (like stocks) experience (so long as the long-term returns are good). Those investors will find private equity and venture capital less attractive if current practices are replaced with something closer to “grade deflation”.

- A shift in investment from higher-risk companies to more mature ones: If private equity and venture capital investments have to be graded on a harsher scale, funds will be less likely to invest in higher-risk companies (whose valuations are more likely to swing under stricter methodologies) and more likely to invest in mature companies with more predictable financials (ones that behave more like publicly traded companies). That would be a blow to smaller and earlier-stage companies.
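To make the “buffering” effect in the first point concrete, here is a minimal sketch that assumes a simple Geltner-style appraisal-smoothing model, in which each reported return blends the current “true” return with the previously reported one. The return series is randomly generated and purely hypothetical, and `alpha` is an illustrative staleness parameter, not something funds actually disclose.

```python
import random
import statistics


def smooth_returns(true_returns, alpha):
    """Apply Geltner-style appraisal smoothing to a return series.

    reported_t = alpha * true_t + (1 - alpha) * reported_{t-1}

    alpha = 1.0 means marks track the true value exactly; a smaller alpha
    means staler, more slowly adjusted marks. Illustrative model only, not
    a description of how any particular fund values its holdings.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    reported = []
    prev = 0.0  # assumption: no reported return before the first period
    for r in true_returns:
        prev = alpha * r + (1 - alpha) * prev
        reported.append(prev)
    return reported


def annualized_vol(monthly_returns):
    """Naive annualization (stdev * sqrt(12)). It ignores autocorrelation,
    which is exactly why it understates the risk of a smoothed series."""
    return statistics.stdev(monthly_returns) * 12 ** 0.5


random.seed(42)
# Hypothetical: 20 years of monthly "true" returns, mean 1%, stdev 6%.
true_returns = [random.gauss(0.01, 0.06) for _ in range(240)]
reported = smooth_returns(true_returns, alpha=0.4)

print(f"volatility of true returns:     {annualized_vol(true_returns):.1%}")
print(f"volatility of reported returns: {annualized_vol(reported):.1%}")
```

Under this model the smoothed series keeps the same long-run average return, but its volatility shrinks by a factor of roughly sqrt(alpha / (2 - alpha)), which is about one half when alpha = 0.4. Moving to stricter, more market-based marks amounts to pushing alpha toward 1, which makes the reported series look as turbulent as the underlying values.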

The problem is investors are often allowed to keep using the reported NAV figures even if they know they are out of date or weren’t measured properly. In those scenarios, the accounting rules say an investor “shall consider whether an adjustment” is necessary. But the rules don’t require an investor to do anything more than consider it. There’s no outright prohibition on using the reported NAV even if the investor knows it’s completely unreasonable.

Private Equity Caught in Crosshairs of Elise Stefanik’s Attack on Harvard

Jonathan Weil | Wall Street Journal