A cab driver the other day went off on me with a rant about how new smartphone users were all smug, arrogant gadget snobs for using phones that did more than just make phone calls. “Why you gotta need more than just the phone?” he asked.

While he was probably right on the money with the “smug”, “arrogant”, and “snob” parts of his description of smartphone users (they certainly describe yours truly), I do think he’s ignoring a lot of the important changes the smartphone revolution has brought to the technology industry. He is also ignoring why so many of the industry’s venture capitalists and technology companies are, as a result, investing so heavily in this direction. This post will be the first of two posts looking at what I think are the four big impacts of smartphones like the BlackBerry and the iPhone on the broader technology landscape:

- It’s the software, stupid

- Look ma, no <insert other device here>

- Putting the carriers in their place

- Contextuality

I. It’s the software, stupid!

Possibly the greatest impact of the smartphone revolution lies in the very definition of a smartphone: a phone which can run a rich operating system and actual applications. As my belligerent cab driver pointed out, the cellular phone revolution was originally about being able to talk to other people on the go. People bought phones based on network coverage, call quality, the weight of the phone, and other concerns primarily motivated by call usability.

Smartphones, however, change that. Instead of just making phone calls, they also do plenty of other things. While a lot of consumers focus their attention on how their phones now have touchscreens, built-in cameras, GPS, and motion-sensors, the magic change that I see is the ability to actually run programs.

Why do I say this software thing is more significant than the other features which have made their way onto the phone? There are a number of reasons, but the big idea is that the ability to run software makes smartphones look like mobile computers. We have seen this play out in a number of ways:

- The potential uses for a mobile phone have exploded overnight. Phones used to be pretty much limited to making phone calls, sending text messages/emails, playing music, and taking pictures. Now they can be used to play games, look up information, and even help doctors diagnose and treat patients. Software extends a computer’s usefulness beyond what a manufacturer like Dell, HP, or Apple has built into the hardware. In the same way, software opens up new possibilities for mobile phones in ways which we are only beginning to see.

- Phones can now be “updated”. Before, phones were simply replaced when they became outdated. Now, some users expect that a phone they buy will be maintained even after new models are released. Case in point: users threw a fit when Samsung decided not to let owners of the Samsung Galaxy update it to a newer version of the Android operating system. Can you imagine users 10 years ago getting up in arms if Samsung didn’t ship a new 2 MP mini-camera to everyone who owned an earlier version of the phone with only a 1 MP camera?

- An entire new software industry has emerged with its own standards and idiosyncrasies. About four decades ago, the rise of the computer created a brand new industry almost out of thin air. Just think of all the wealth and productivity that companies like Oracle, Microsoft, and Adobe have created over the past thirty years. There are early signs that a similar revolution is happening because of the rise of the smartphone. Entire fortunes have been created “out of thin air” as enterprising individuals and companies move to capture the profits from building software for the legions of iPhones and Android phones out there. What remains to be seen is whether the mobile software industry will end up looking like the PC software industry. Its new operating systems, screen sizes, and technologies may instead create something more like a distant cousin of the first software revolution.

II. Look ma, no <insert other device here>

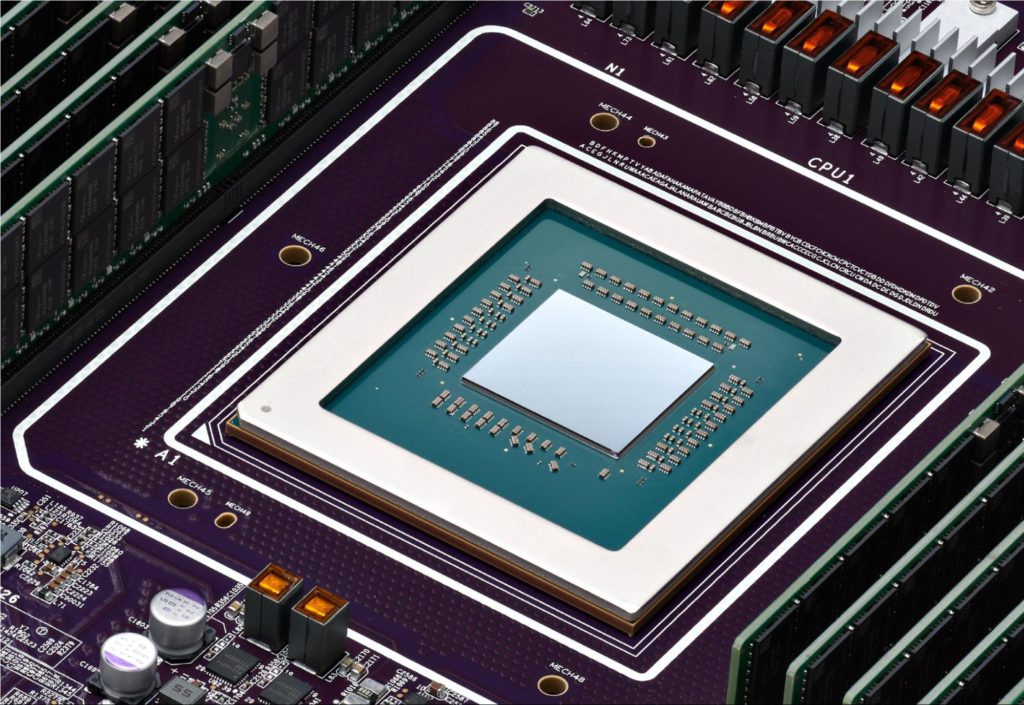

One of the most amazing consequences of Moore’s Law is that devices can quickly take on a heckuva lot more functionality than they used to. The smartphone is a perfect example of this Swiss Army knife mentality. The typical high-end smartphone today can:

- take pictures

- use GPS

- play movies

- play songs

- read articles/books

- find what direction it’s being pointed in

- sense motion

- record sounds

- run software

… not to mention receive and make phone calls and texts like a phone.

But unlike cameras, GPS devices, portable media players, eReaders, compasses, Wii-motes, tape recorders, and computers, the phone is something you are likely to keep with you all day long. And if you have a smartphone which can double as a camera, GPS, portable media player, eReader, compass, Wii-mote, tape recorder, and computer all at once, tell me: why are you going to hold on to those other devices?

That is, of course, a dramatic oversimplification. After all, I have yet to see a phone which can match a dedicated camera’s image quality or a computer’s speed, screen size, and range of software, so there are definitely reasons you’d pick one of these devices over a smartphone. The point, however, isn’t that smartphones will make these other devices irrelevant. It is that they will disrupt these markets in exactly the way that Clayton Christensen described in his book *The Innovator’s Dilemma*. That will make business a whole lot harder for companies that are heavily invested in these other device categories. And make no mistake: we’re already seeing this happen. GPS companies face falling prices and demand as smartphones take on more and more sophisticated functionality (heck, GPS makers like Garmin are even trying to get into the mobile phone business!). I wouldn’t be surprised if we soon see similar declines in market growth rates and profitability for all sorts of other devices.

III. Putting the carriers in their place

Throughout most of the history of the phone industry, the carriers were the dominant power. Sure, enormous phone companies like Nokia, Samsung, and Motorola had some clout, but at the end of the day, especially in the US, everybody felt the crushing influence of the major wireless carriers.

In the US, the carriers regulated access to phones with subsidies. They controlled which functions were allowed. They controlled how many texts and phone calls you could make. When they did let you access the internet, they exerted strong influence over which websites you could visit and which ringtones/wallpapers/music you could download. In short, they managed the business to minimize costs and risks. They could do so because their government-granted monopolies (over the right to use wireless spectrum) and already-built networks made it impossible for a new guy to enter the market.

But this sorry state of affairs has already started to change with the advent of the smartphone. RIM’s BlackBerry had started to shift the balance of power, but Apple’s iPhone really shook things up. That was precisely because users started demanding more than just a wireless service plan. They wanted a particular operating system with a particular internet experience and a particular set of applications. Oh, it’s on AT&T? That’s not important; tell me more about the Apple part of it!

What’s more, the iPhone’s commercial success accelerated this change in consumer appetites. Smartphone users were no longer picking a wireless service provider because of coverage, the cost of service, or special carrier-branded applications. All of that was now secondary to the availability of the phone they wanted and the applications and internet experience they could get on it. And much to the carriers’ dismay, the wireless carrier was becoming less like the gatekeeper who got to charge crazy prices because he/she held the keys to the walled garden. It was becoming more like the dumb pipe through which people connected their iPhones to the web.

Now, it would be an exaggeration to say that the carriers will necessarily turn into the “dumb pipes” that today’s internet service providers are (remember when everyone in the US used AOL?), as these large carriers are still largely insulated from competition. But there are signs that the carriers are adapting to their new role. AT&T and T-Mobile offered devices from Apple and from Google’s partners, respectively. In response, the once ultra-closed Verizon now allows Palm webOS and Google Android devices to roam free on its network. It has even agreed to let VoIP applications like Skype access its network, something which jeopardizes its former core voice revenue stream.

As they see their influence over the basic phone experience slip, the carriers will likely shift their focus to finding ways to better monetize all the traffic pouring through their networks. They might get a cut of the ad, virtual goods, and eCommerce revenue flowing through those networks. They might instead move away from unlimited, “all you can eat” pricing for network access. It is unclear which, but it will be interesting to see how this ecosystem evolves.

IV. Contextuality

There is no better price than the amazingly low price of free. And, in my humble opinion, it is that amazingly low price of free which has enabled web services to achieve such high rates of adoption. Ask yourself: would services like Facebook and Google have grown nearly as fast if they weren’t free to use?

How does one provide compelling value to users for free? Before the age of the internet, the answer to that age-old question was simple: you either got a nice government subsidy, or you just didn’t. Thankfully, the advent of the internet allowed for an entirely new business model: providing services for free and still making a decent profit through ads. The over-hyping of this business model led to the dot-com crash in 2001, as countless websites found it difficult to make money purely from ads. Services like Google survived because they could make the advertising on their pages more valuable. That was partly because they had a ton of traffic. It was also because they could use the content on a page to pick ads that visitors were significantly more likely to care about.

The idea that context could be used to increase ad conversion rates (the percentage of people who see an ad and actually end up buying) has spawned a whole new world of web startups and technologies. They aim to mine context for better ad targeting. Facebook is one example: it uses social context (who your friends are, what your interests are, what your friends’ interests are) to serve more targeted ads.

So, where do smartphones fit in? There are two ways in which smartphones completely change the context-to-advertising dynamic:

- Location-based services: Your phone not only has a processor which can run software. It is also likely to have GPS built in, and it is something you carry on your person at all hours of the day. This means the phone not only knows what apps/websites you’re using; it also knows where you are when you’re using them. It can even tell whether you’re in a vehicle, based on how fast you are moving. If that doesn’t let a merchant figure out how to send you a very relevant ad, I don’t know what will. The Yowza iPhone application, which lets you search for mobile coupons for local stores right on your phone, is an example of how this might play out in the future.

- Augmented reality: In the same way that GPS lets mobile applications offer location-based services, the camera, compass, and GPS in a mobile phone let mobile applications do something called augmented reality. The concept behind augmented reality (AR) is that, in the real world, you and I are limited to what our five senses can perceive. If I see an ad for a book, I can only perceive what is on the advertisement. I don’t necessarily know how much it costs on Amazon.com or what my friends on Facebook have said about it. Of course, with a mobile phone, I could look those things up on the internet, but AR takes this a step further. Instead of merely looking something up online, AR actually overlays content and information on top of what you are seeing on your phone screen. One example of this is the ShopSavvy application for Android, which lets you scan product barcodes to find product reviews and even pricing from online and other local stores! Google has pushed this even further with Google Goggles, which can recognize pictures of landmarks, books, and even bottles of wine! For an advertiser or a store, the ability to embed additional content through AR technology is the ultimate in providing context, but only to those people who want it. Forget trying to strike the right balance between putting too much or too little information on an ad. Use AR so that only the people who are interested get the extra information.
